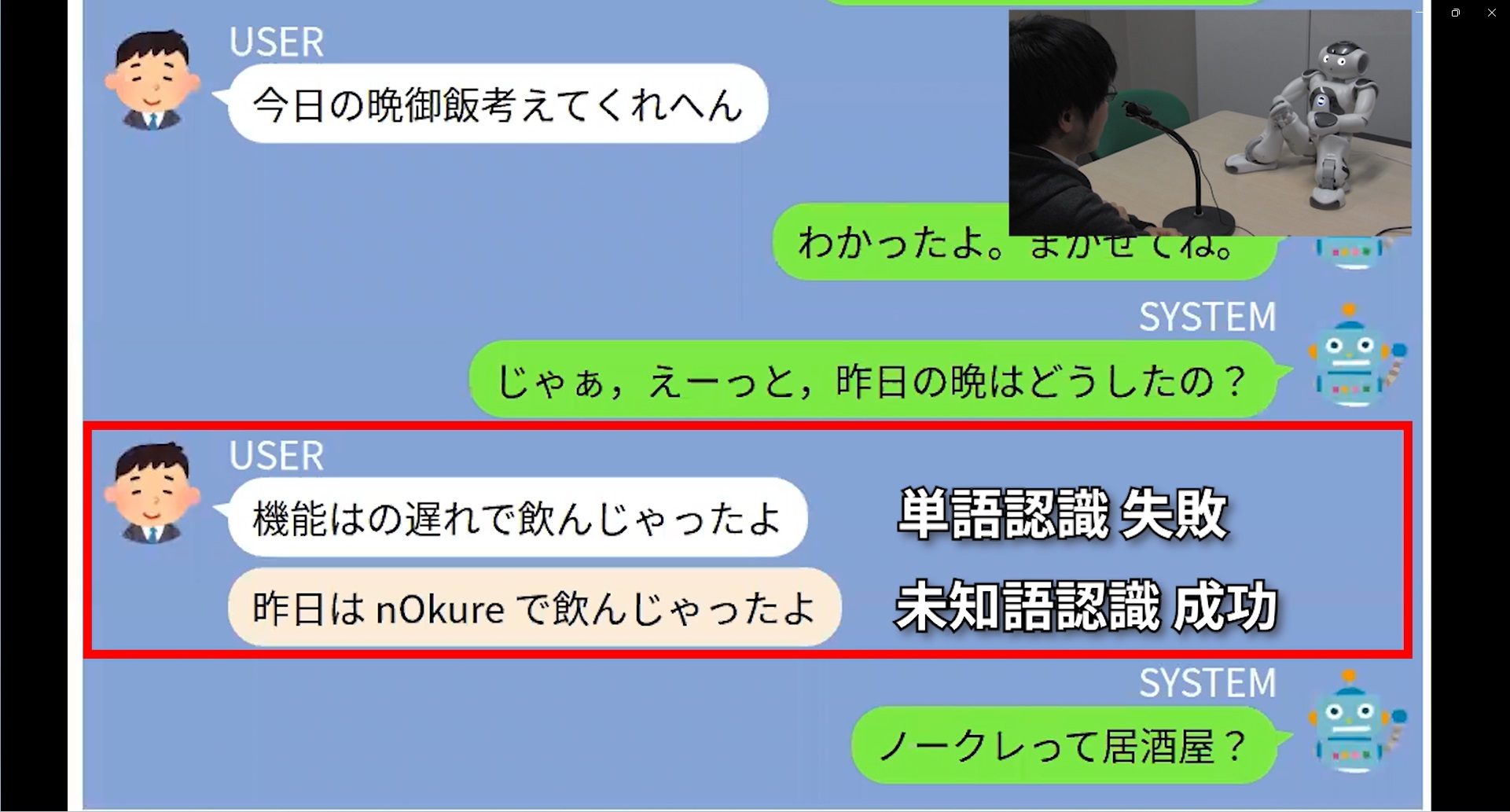

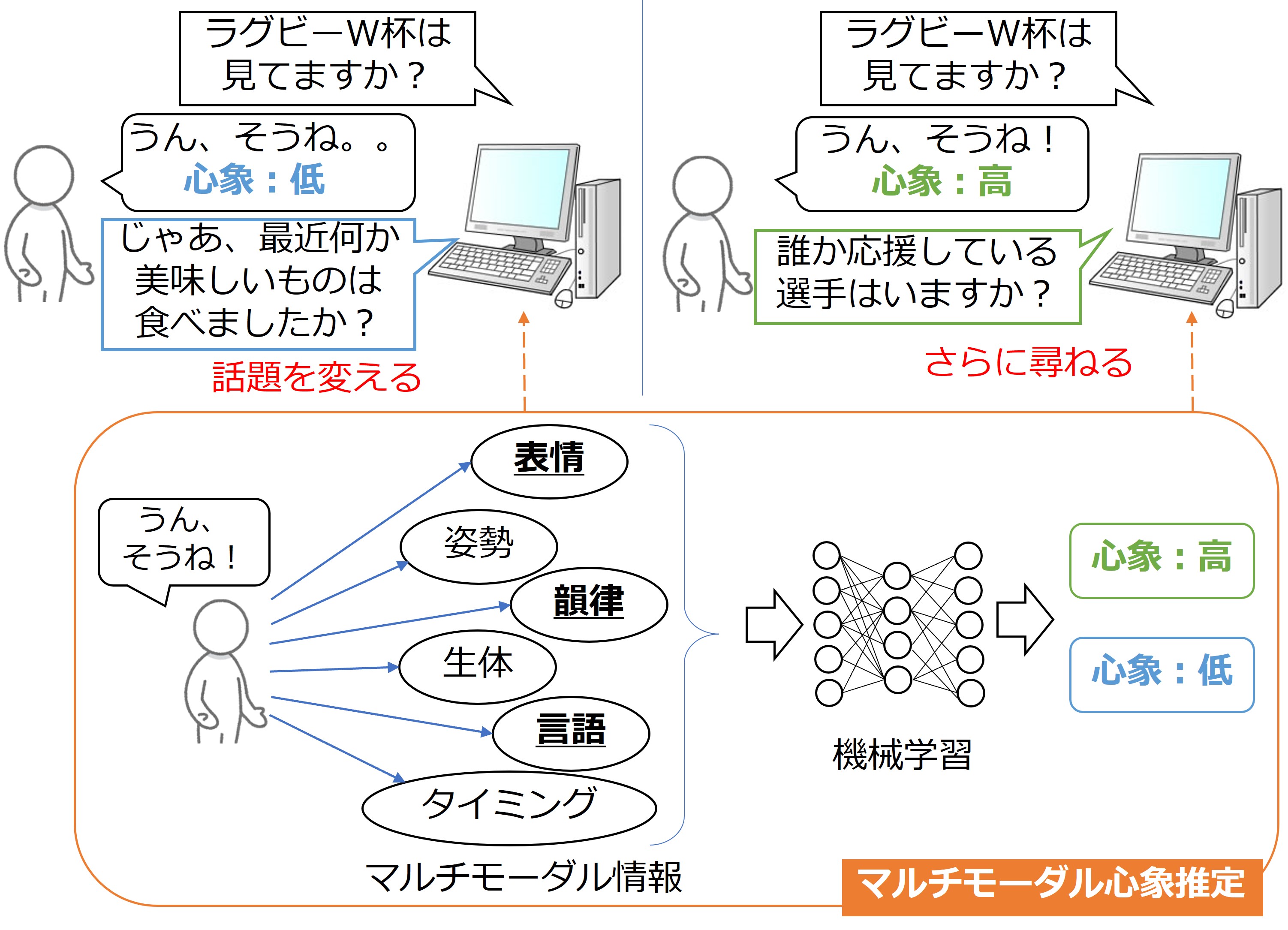

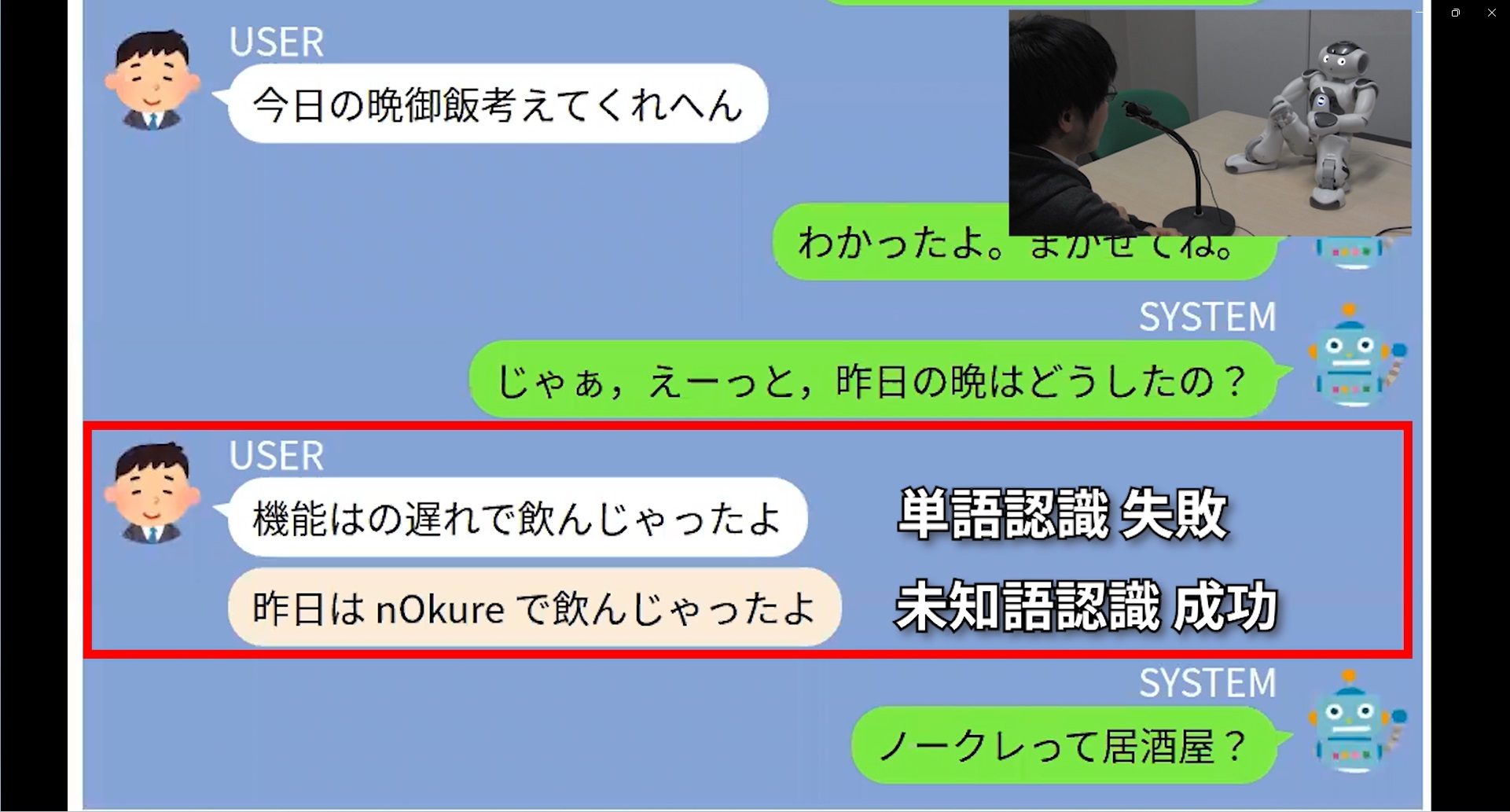

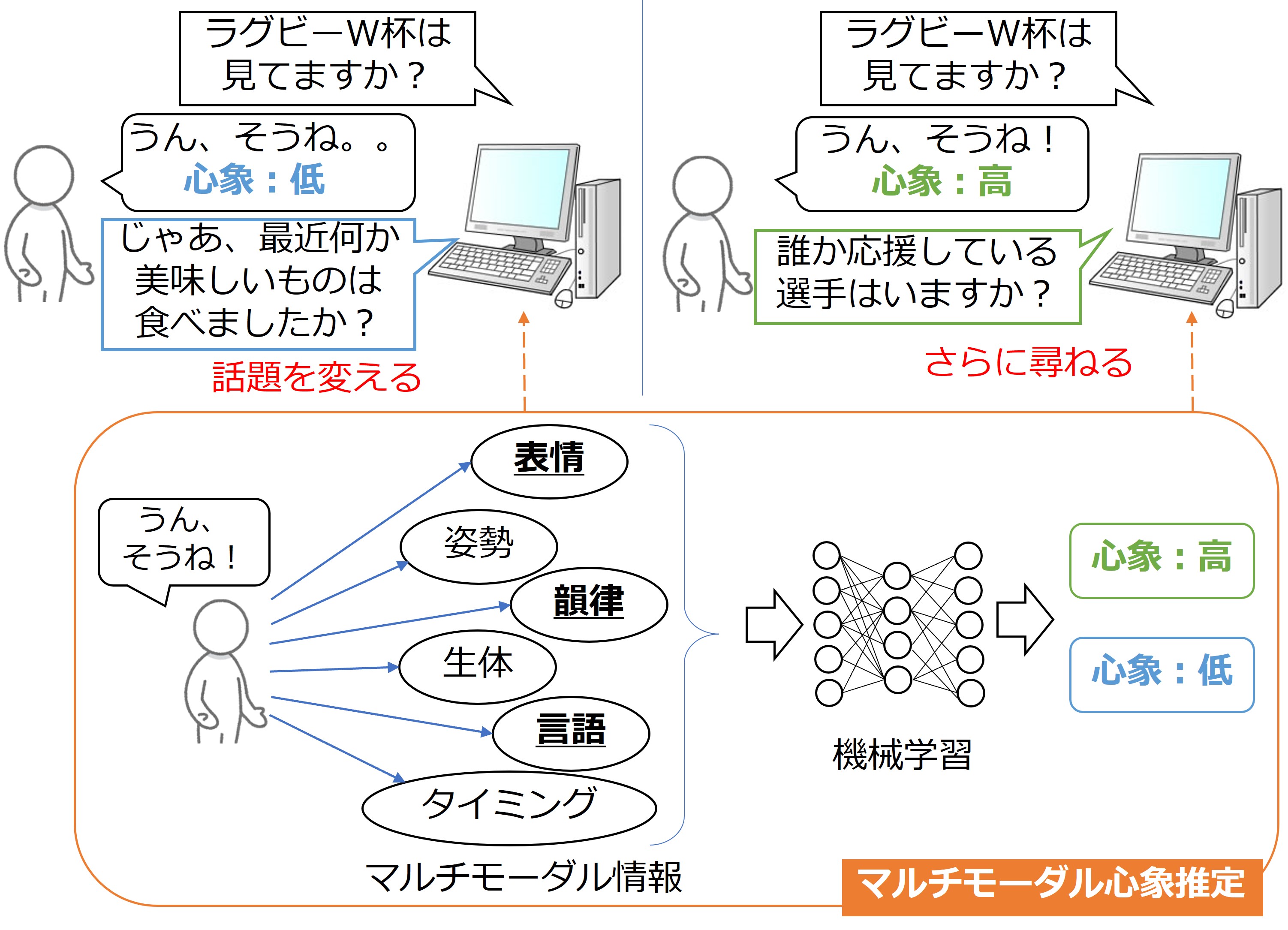

To make machines accessible and easy for people to use, it is desirable for them to have the spoken dialogue capability that is innate in humans. In our laboratory, we study systems that interact with humans through speech, language, and multimodal information. With the rise of generative AI, the technology for generating coherent word sequences has advanced considerably, but machines still lack the spoken dialogue capability they would need to become symbiotic partners with humans. Research is needed not only to make systems respond reactively, but also to understand the people they interact with and to gain a deeper understanding of spoken dialogue as a phenomenon. Our goal is to realize spoken dialogue capabilities that enable machines to become symbiotic partners with humans, drawing on a wide range of perspectives, including acoustic signal processing, speech recognition, natural language processing, knowledge graphs, multimodal interaction, user modeling, and social psychology.

| Period | Position |
|---|---|
| 2014.4 -- present | Professor at SANKEN, Osaka University |
| 2010.6 -- 2014.3 | Associate Professor at Graduate School of Engineering, Nagoya University |
| (2010.10 -- 2014.3) | (Researcher, JST PRESTO "Information Environment and Humans" Project) |
| 2002.12 -- 2010.5 | Assistant Professor at Graduate School of Informatics, Kyoto University |
| (2008.6 -- 2009.5) | (Visiting Scientist at Department of Computer Science, Carnegie Mellon University) |